Facilitating communication across generations

Preeti Sivasankar is head of Purdue’s Department of Speech, Language, and Hearing Sciences and also studies the factors that can impact voice quality. (Purdue University photo/John Underwood)

Purdue’s speech, language and hearing sciences professionals work with all ages to resolve communication disorders

When Marion Underwood describes the life-altering care speech-language pathologists provide, she does so with authority.

Underwood witnessed it firsthand when her mother suddenly lost the ability to swallow late in life.

“We faced a real emergency; it was a terrible experience for her,” says Underwood, dean of Purdue’s College of Health and Human Sciences, which is home to the University’s world-renowned Department of Speech, Language, and Hearing Sciences (SLHS). “But within a day or two, her assisted living community got a speech pathologist who gave her some thickening agents and some exercises, and soon the problem was solved. She could swallow again. Her quality of life immediately got better because that speech-language pathologist could help her learn to swallow. It’s amazing what they are able to help people do.”

Improving a patient’s quality of life is a central mission across the profession, whether that means treating an older person with dysphagia like Underwood’s mother, evaluating an infant’s earliest communication skills or researching the issues people face at any stage of life in between.

That life-span scope of SLHS practitioners’ work frequently surprises people who are only vaguely familiar with the field, says SLHS head Preeti Sivasankar.

“Most people have met a speech therapist when they were in school, for helping say their Rs and other speech sounds correctly,” Sivasankar says. “But professionals we train support individuals across their life span.”

In fact, the communication issues SLHS speech-language pathologists and audiologists address are often critical.

“What they do, what they study, what they help people with is of such fundamental importance,” Underwood says. “If you’ve ever had a child who seems like they can’t hear, how in the world can you know whether an infant can’t hear? How do you figure that out? If you’ve ever had a child who doesn’t speak, who’s late in talking, that’s so frightening as a parent. What do you do about that? If you’ve ever had a child with autism or a child who stutters? If you’ve ever had an older person in your life who, all of a sudden, their hearing starts to go?

“These are programs that focus on helping human beings hear, speak, swallow and read. These are fundamental human capabilities. This is what makes us human, that we can do these things — and when we can’t, the quality of our lives declines.”

Purdue President Edward Elliott instructed the University’s deans to create the SLHS program in 1935 with the goal of addressing speech or hearing impairments that might harm Boilermaker students’ employability.

Purdue hired Max David Steer, then a 25-year-old PhD student in the University of Iowa’s speech pathology program, to direct a new speech clinic at University Hall. Each new Purdue student would undergo a speech and hearing evaluation at the beginning of the fall semester; it was the first university testing program of its kind in the U.S.

Today, SLHS clinicians still give speech and language evaluations and treatment to students, but the department offers so much more than that — to those both inside and outside the immediate Purdue community.

Residents of Greater Lafayette greatly benefit from SLHS clinics, summer camps and other programming available to address factors that can disrupt the communication process. SLHS students benefit even more from the practical learning opportunities, mentorship and world-class instruction available in a speech-language pathology graduate program that ranked third and an audiology doctoral program that ranked ninth in U.S. News & World Report’s most recent set of health-school rankings.

“What brings me the most joy is the mentoring of students and seeing them succeed,” Sivasankar says. “Seeing how they blossom and grow as speech pathologists, as scientists — that I would say is the favorite part of my job.”

Like Underwood, Sivasankar has seen through her own parents the good her field can do. Her introduction to speech-language pathology came when her father received treatment for a moderate stutter. She later developed a research interest in voice issues when her mother experienced occasional vocal fatigue after teaching.

Now, Sivasankar is leading a multidisciplinary research team studying the environmental and biological factors that might influence why some people experience voice problems when others do not.

As evidenced by Sivasankar’s research and that of her faculty colleagues whose work is profiled below, SLHS practitioners’ work is certainly not confined to any age group. They are committed to improving the quality of life for all communicators.

THE EYES HAVE IT:

ARIELLE BOROVSKY

Determining what the youngest communicators know can be tricky. Some are not yet able to tell researchers what they know, while others have shifting interests and a limited ability to stay on one task, the default setting of many a 2-year-old.

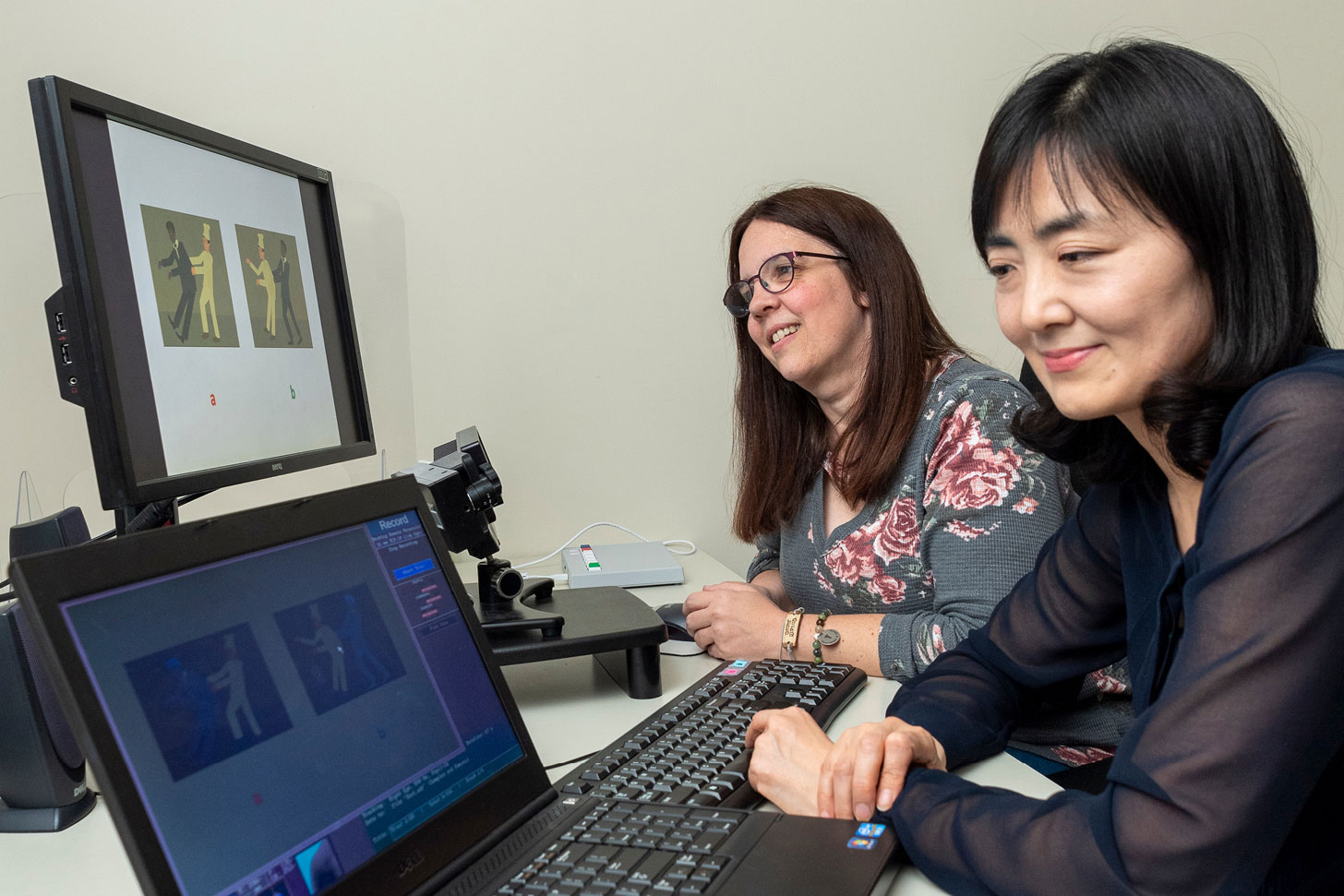

Associate professor Arielle Borovsky and her research team have to get creative to clear that potential hurdle. They turn study participation into a fun activity.

One of the team’s unique research techniques tracks a child’s gaze as they take part in different tasks. It begins with placing a sticker on the child’s forehead, just above the eyebrow.

The sticker interacts with sensors on a high-speed camera that track the child’s eye movements while the child is playing face-to-face games or watching a video on a computer screen. When the child’s eyes focus on the objects mentioned during the game or video, the researchers gain a real-time snapshot of the child’s vocabulary and language-recognition skills.

Arielle Borovsky, right, and her research team gain an understanding of children’s vocabulary and language-recognition skills by tracking their eye movements while playing games or watching videos. (Purdue University photo/John Underwood)

“This builds on something they’re already interested in doing,” Borovsky says. “This helps a child show us what they know without us having to put too many demands on them.”

Approximately 7% of school-age children contend with a developmental language disorder. Many of these children’s difficulties, however, go undiagnosed for the first several years of school, which makes it hard for them to catch up with their peers.

Borovsky studies children’s word-learning and language-processing skills in an effort to identify as quickly as possible which toddlers and preschoolers might need additional support, as well as which factors help children learn to communicate. One of her current studies follows children from 18 months to 4 years old, although her lab also works with children who are even younger.

“The earlier we can identify, the sooner we can help,” says Borovsky, director of Purdue’s Language Learning and Meaning Acquisition Laboratory. “Over time, children can experience learning delays. It’s way better to identify a child early and help them build their strengths over time. By the time they get to school, they may have missed many learning opportunities. With earlier identification, before school entry, we hope to develop tools to help all children succeed.”

Borovsky’s research group is also studying whether the structure of information contained in the words a child knows may help predict vocabulary growth and later outcomes.

“We can do that in a level of detail now that hasn’t been possible before, and we can also understand that through the context of the words that they’re actually understanding in eye-tracking measures that we use,” Borovsky says.

STUDYING MULTISENSORY LEARNING:

NATALYA KAGANOVICH

Judging by the “swimming cap” covering their heads, it might appear that the school-age children who visit associate professor Natalya Kaganovich’s Auditory Cognitive Neuroscience Laboratory are preparing to take a fun dip in the pool.

Not so, but Kaganovich’s research team still hopes study participants who don the special headwear will have fun without getting wet. The cap is equipped with 32 electrodes that record tiny electrical signals from the scalp, enabling the researchers to monitor the children’s neurological responses to the sounds and images embedded in the entertaining activities they complete while wearing the cap.

The event-related potential (ERP) technique is especially valuable with children because it does not require them to respond overtly to a task. Instead, researchers can study processes such as memory, language and attention simply by recording how a child’s brain activity changes in response to various stimuli.

Kaganovich is working on a National Institutes of Health-funded grant project that studies multisensory speech perception: the ability to use visual speech cues, such as watching someone’s mouth when they’re speaking in a noisy room, to augment what one hears. Her research team wants to determine whether developmental language disorder, or DLD, is strictly an auditory condition or whether it extends to other modalities that are critical for language acquisition.

DLD affects approximately 7% of children and manifests itself as significant difficulty in producing and/or understanding language without an obvious cause.

While playing a video game, a child wears a cap that enables associate professor Natalya Kaganovich, center, and her research team to study the child’s neurological responses to stimuli embedded in the activity. (Purdue University photo/John Underwood)

“What we are finding in my lab is that children with developmental language disorder cannot match speakers’ lip movements with heard words as easily as children with typical language development can. This means in a noisy place like school, they will be at a disadvantage,” Kaganovich says. “Impairments in combining auditory and visual information may have many causes, such as weakness in processing shape or movement, inability to fully allocate attention to visual information or difficulty remembering what articulations of individual words look like.

“We want to understand what is and isn’t impaired in children with developmental language disorder and how exactly such impairments influence language acquisition and use.”

The long-term objective is to establish more effective therapeutic techniques for children with language difficulties, ideally reducing the likelihood that they fall behind their peers as their academic workload becomes more rigorous.

“If we know that children with DLD are struggling with incorporating lip movements, can we add some component to training to help them in a noisy place?” Kaganovich says. “I don’t think we’re quite there yet because we’re still in the process of trying to understand what is happening with these children. Once we know, hopefully it can then transition into therapies.”

LOVE OF LITERACY:

CHENELL LOUDERMILL

Chenell Loudermill grew up in a family of helpers who taught her the importance of assisting others in pursuit of their goals. Once she began working as a speech-language pathologist and saw how many children struggled specifically with reading, Loudermill knew she had found her purpose. In addition to her focus on literacy, Loudermill works with children who have autism or difficulty with social interaction.

“What we have learned from research is that every child can learn to read,” says Loudermill, a clinical professor. “There’s no reason for a child to not know how to read, which is good news. If they just get the help they need, this crisis can be avoided.”

For those who never gain adequate reading skills, the consequences can be severe.

Dyslexic children who receive effective instruction in kindergarten and first grade are far more likely to read at grade level than those who do not receive assistance until third grade, according to the International Dyslexia Association. And 74% of the third graders who are poor readers will remain poor readers in ninth grade.

Chenell Loudermill, director of clinical education in speech-language pathology, helps children build literacy skills and also works with those who have autism or social-skills difficulties. (Purdue University photo/John Underwood)

And the issues only continue to mount.

“The high school dropout rate of kids who cannot read increases, of course, as they get older,” says Loudermill, who is also director of clinical education in speech-language pathology. “Poor readers are four times more likely to drop out of high school. Poor reading impacts behavior, and children who have reading difficulties are at a higher risk for depression. You can start to see this as early as third or fourth grade. It has a long-lasting impact on their lives.”

Reading problems continue to plague some of these students well into adulthood. Noting that “quality of life is significantly diminished for illiterate adults,” Loudermill shared statistics from the National Adult Literacy Survey showing that 70% of incarcerated adults couldn’t read at a fourth-grade level.

Loudermill is passionate about helping those she assists — typically K-12 students — overcome difficulties with speech sound production, language and literacy, and social aspects of language in an effort to help them avoid these negative potential outcomes. Few aspects of her job are more satisfying than witnessing gains made by her clients, whether it’s one of her autistic clients or a young learner whose reading skills are developing.

“One of the things that we really enjoy is seeing how they have progressed through the years, or having families reach back out to you and say, ‘Look at my child now!’” Loudermill says. “You don’t always know the impact on a family or on a child’s life you have in the moment. But later, when you learn that, it is very rewarding.

“Ideally, we want to address these issues early in life and hope they won’t need us anymore. We know that early intervention is key.”

EXAMINING VOCAL VARIANCES:

PREETI SIVASANKAR

If someone’s voice is as unique as a fingerprint, so too are the usage, environmental and genetic factors that can affect voice quality. Preeti Sivasankar is fascinated by how those factors affect each speaker differently.

“Two people may use their voice to the same extent,” says Sivasankar, whose Voice Laboratory studies the factors that can create voice problems. “They both go to the game, they’re both yelling and they both feel soreness in their throat, but only one of them loses their voice. Why is that?”

Determining the answer can be a complicated process. The individualized nature of this dilemma makes it tricky to determine the extent to which a given factor affects a speaker’s voice, as well as the steps necessary to neutralize the problem.

“In some sense, it’s a very simple question: Why does someone have a voice problem? But the reason it’s really hard to tease apart is because of this multifactorial kind of intersection,” Sivasankar says. “If I were to draw a Venn diagram, each person has a very unique profile because their environment or lifestyle factors may dominate how their voice is produced and sounds. For someone else, it may be the genetic component. That’s what makes it challenging.”

Sivasankar has two lines of research, and both have been funded by the National Institutes of Health. She studies tissue biology in an effort to gain insight into what causes vocal-fold inflammation. She also runs a human-subject lab whose mission has practical value to those who grapple with vocal fatigue.

Sivasankar’s mother was one of those people during her own teaching career. The need to communicate constantly while teaching placed a noticeable strain on her voice, and Sivasankar knows the same holds true for many faculty colleagues at Purdue.

“In the moment when someone is fatigued, how can we prevent it from getting worse?” she asks. “I’m thinking about the professor on campus who teaches three classes back-to-back. Maybe they lecture in Loeb Playhouse, start feeling fatigue and now have to walk to Lilly to teach another class. In that 10 or 15 minutes, is there something they can do to reset their larynx and voice box? We’re asking those questions so that we can identify solutions that will be immediately helpful to speakers.”

Roughly 10% of Americans suffer from voice problems, so identifying the root causes of these issues, and determining how to treat them more effectively, could yield massive cost savings and quality-of-life benefits for millions. There are still many questions left to answer, however.

“The reason we ask these questions is that if we know the why, then we know the how and what to do,” Sivasankar says.

AIDING LANGUAGE RECOVERY:

JIYEON LEE

The eye-tracking technique Borovsky uses with the youngest language learners can also provide invaluable insights when working with older adults whose ability to communicate has been impaired by aphasia, a language disorder typically caused by a stroke or brain injury.

According to the National Aphasia Association, stroke is the third-leading cause of death in the U.S., and about a third of stroke survivors acquire aphasia.

“Eye movements are usually intact in stroke patients, and they’re timely and almost automatic, so you tend to look at things that you are thinking about,” says associate professor Jiyeon Lee, who leads Purdue’s Aphasia Research Laboratory. “Or, when you want to talk about something, your eye movements naturally are drawn to what you’re thinking. So, we use eye movements as a window to study aphasia.”

Aphasia results from damage to the area of the brain that controls language functioning. As part of their efforts to understand how aphasia affects someone’s ability to produce and comprehend speech, Lee and her colleagues also study how a typical brain processes everyday conversation.

“Our brain is trying to get some kind of meaning out of the stream of words that we hear. To do that, we use the rules of language,” Lee says. “Essentially, our first line of research is to understand when we produce language or comprehend language, how we process information, and how that information processing is different between persons with aphasia and persons without aphasia.”

Associate professor Jiyeon Lee and her colleagues study how a typical brain processes everyday conversation while aiming to understand how aphasia affects someone’s ability to produce and comprehend speech. (Purdue University photo/John Underwood)

Once a person is given an aphasia diagnosis, the treatment objective becomes maximizing their recovery of language skills, Lee says. Her research group recently received grant support from the National Institutes of Health to study language learning among aphasia patients.

“If you think about how people learn language, you learn it in a very natural setting by talking to other people or by being immersed in a communicative environment,” Lee says. “We try to use those naturalistic inputs and study whether persons with aphasia learn rules of language and can reuse them in their own speech. The long-term goal is to develop cost-effective treatments for aphasia.”

Because they study different age groups using similar eye-tracking techniques, Lee and Borovsky see an opportunity to collaborate and potentially gain a broad understanding of principles that are shared across generations.

“One thing that I think would be really cool is for us to develop tasks that could give us a life span perspective on language learning and development using the exact same method,” Borovsky says. “I would love to see us be able to do that.”

With these shared principles in mind, Lee says she also enjoys interacting with colleagues who work with other age groups. She is able to glean knowledge from those who study language learning and processing in young children or young adults and then apply their insights to her work with persons who have aphasia.

The specifics certainly change depending on the age group or condition in question, but Lee still sees clear commonalities between her work and that of her colleagues — no matter how old their typical study participants may be.

“I think we are ultimately approaching the same question, but using different populations, different age groups,” she says. “If you look at it, the underlying theme is really the same. We are trying to understand how the language really works, how it’s learned, how it’s impaired and how it can be restored.”